1 Create the vLLM environment

Install the default version of vLLM:

conda create -n myenv python=3.10 -y

conda activate myenv

# Switch to the Tsinghua PyPI mirror

pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

# Install vLLM with CUDA 12.1.

pip install vllm

To install a specific version of vLLM, set the following environment variables first:

# Install vLLM with CUDA 11.8.

export VLLM_VERSION=0.4.0

export PYTHON_VERSION=310

pip install https://github.com/vllm-project/vllm/releases/download/v${VLLM_VERSION}/vllm-${VLLM_VERSION}+cu118-cp${PYTHON_VERSION}-cp${PYTHON_VERSION}-manylinux1_x86_64.whl --extra-index-url https://download.pytorch.org/whl/cu118

2 Install an open-source LLM

2.1 Installation

Install modelscope: pip install modelscope

2.2 Download the model (GLM4-9b)

For downloading open-source models, see: ModelScope community model download

from modelscope.hub.snapshot_download import snapshot_download

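model_dir = snapshot_download('ZhipuAI/glm-4-9b-chat', cache_dir='autodl-tmp', revision='master')

- The first argument is the model name.

- cache_dir is the local path the model is downloaded to; in this example the path is /root/autodl-tmp.

- revision is the model version.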

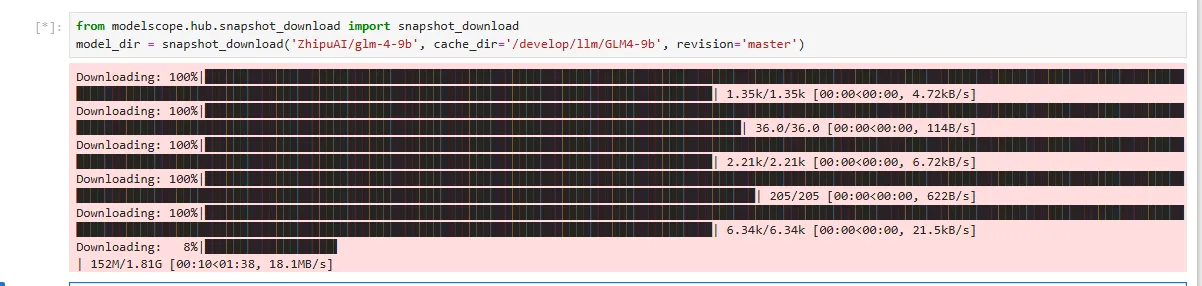

Run result
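Before pointing a server at the downloaded files, it can help to confirm that the directory returned by snapshot_download really holds a model. The sketch below is only a sanity check under one assumption: a Hugging Face style checkpoint such as glm-4-9b-chat ships a config.json at its top level. The helper name looks_like_model_dir and the example path are illustrative, not part of modelscope.

```python
from pathlib import Path


def looks_like_model_dir(model_dir: str) -> bool:
    """Return True if model_dir is a directory that contains a config.json file."""
    path = Path(model_dir)
    return path.is_dir() and (path / "config.json").is_file()


if __name__ == "__main__":
    # Hypothetical location; in practice pass the model_dir value returned
    # by snapshot_download instead of a hard-coded path.
    candidate = "/root/autodl-tmp/ZhipuAI/glm-4-9b-chat"
    print(candidate, "->", looks_like_model_dir(candidate))
```

If the check prints False, the path later given to the server with --model is probably not the directory the download actually wrote to.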

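2.3 Start the model

Reference command:

python -m vllm.entrypoints.openai.api_server --model /develop/llm/GLM4-9b/ZhipuAI/glm-4-9b/ --served-model-name glm4 --gpu-memory-utilization 1 --trust-remote-code

- model: path to the model

- max-model-len: context length of the model (2048 means a 2K context)

- gpu-memory-utilization: fraction of GPU memory vLLM is allowed to use

- served-model-name: name under which the model is served; if this parameter is omitted, the model name is the value of the model parameter

- api-key: API key for the model

- More parameters >>>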
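Once the server is running, a quick request shows whether the served model name is wired up correctly. The following is a minimal sketch using only the standard library. It assumes the server listens on vLLM's default port 8000 on the same machine and that no --api-key was set, so the placeholder key "EMPTY" is accepted. The function names build_chat_request and chat are illustrative.

```python
import json
import urllib.request

# Assumptions: default vLLM port 8000, and --served-model-name glm4
# as in the reference command.
BASE_URL = "http://127.0.0.1:8000/v1"
MODEL_NAME = "glm4"


def build_chat_request(prompt: str, api_key: str = "EMPTY") -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible /chat/completions route."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt to the server and return the text of the first choice."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling chat("Hello") from a Python shell should return a short reply. If the server was started with api-key, pass that key to build_chat_request instead of the placeholder.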

3 Install the text embedding model

3.1 Download the model (bge-large-zh)

from modelscope.hub.snapshot_download import snapshot_download

model_dir = snapshot_download('AI-ModelScope/bge-large-zh', cache_dir='/develop/embedding', revision='master')

3.2 Install fastchat

Because vLLM does not support BERT models, the bge-large-zh embedding model has to be deployed with another tool; FastChat is used here:

pip install "fschat[model_worker,webui]==0.2.35"

3.3 Start the model

python -m fastchat.serve.controller --host 127.0.0.1 --port 21003 &

python -m fastchat.serve.model_worker --model-path /root/autodl-tmp/bge/AI-ModelScope/bge-large-zh/ --model-names gpt-4 --num-gpus 1 --controller-address http://127.0.0.1:21003 --host 127.0.0.1 &

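python -m fastchat.serve.openai_api_server --host 127.0.0.1 --port 8200 --controller-address http://127.0.0.1:21003

- The first command starts the controller.

- The second command starts the model worker.

- The third command starts the OpenAI-compatible API server, which sets the address through which the model is accessed externally.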
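To confirm that the three processes work together, one can send a single embedding request to the API server. This is a minimal sketch using only the standard library. It takes port 8200 and the alias gpt-4 from the commands above, and assumes the server follows the OpenAI embeddings response shape (a data list whose items carry an embedding field). The function names are illustrative.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8200/v1"  # --port of the openai_api_server command
MODEL_NAME = "gpt-4"  # alias set with --model-names on the model worker


def build_embedding_request(texts: list[str]) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible /embeddings route."""
    payload = {"model": MODEL_NAME, "input": texts}
    return urllib.request.Request(
        f"{BASE_URL}/embeddings",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embed(texts: list[str], timeout: float = 60.0) -> list[list[float]]:
    """Send the texts to the server and return one vector per input text."""
    with urllib.request.urlopen(build_embedding_request(texts), timeout=timeout) as resp:
        body = json.load(resp)
    return [item["embedding"] for item in body["data"]]
```

A call such as embed(["hello"]) should return one vector. An HTTP 400 at this step points at the embedding service itself rather than at GraphRAG.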

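4 Create the GraphRAG environment

4.1 Install GraphRAG

4.2 Run the project

Create the input directory for the project corpus: mkdir ./input

Initialize the project: python -m graphrag.index --init --root ./

Configure settings.yaml: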

encoding_model: cl100k_base
skip_workflows: []
llm:
  api_key: ${GRAPHRAG_API_KEY}
  type: openai_chat # or azure_openai_chat
  model: glm4
  model_supports_json: true # recommended if this is available for your model.
  # max_tokens: 4000
  # request_timeout: 180.0
  api_base: http://127.0.0.1:8000/v1
  # api_version: 2024-02-15-preview
  # organization: <organization_id>
  # deployment_name: <azure_model_deployment_name>
  # tokens_per_minute: 150_000 # set a leaky bucket throttle
  # requests_per_minute: 10_000 # set a leaky bucket throttle
  # max_retries: 10
  # max_retry_wait: 10.0
  # sleep_on_rate_limit_recommendation: true # whether to sleep when azure suggests wait-times
  # concurrent_requests: 25 # the number of parallel inflight requests that may be made
  # temperature: 0 # temperature for sampling
  # top_p: 1 # top-p sampling
  # n: 1 # Number of completions to generate

parallelization:
  stagger: 0.3
  # num_threads: 50 # the number of threads to use for parallel processing

async_mode: threaded # or asyncio

embeddings:
  ## parallelization: override the global parallelization settings for embeddings
  async_mode: threaded # or asyncio
  llm:
    api_key: ${GRAPHRAG_API_KEY}
    type: openai_embedding # or azure_openai_embedding
    model: gpt-4
    api_base: http://127.0.0.1:8200/v1
    # api_version: 2024-02-15-preview
    # organization: <organization_id>
    # deployment_name: <azure_model_deployment_name>
    # tokens_per_minute: 150_000 # set a leaky bucket throttle
    # requests_per_minute: 10_000 # set a leaky bucket throttle
    # max_retries: 10
    # max_retry_wait: 10.0
    # sleep_on_rate_limit_recommendation: true # whether to sleep when azure suggests wait-times
    # concurrent_requests: 25 # the number of parallel inflight requests that may be made
    # batch_size: 16 # the number of documents to send in a single request
    # batch_max_tokens: 8191 # the maximum number of tokens to send in a single request
    # target: required # or optional

chunks:
  size: 1200
  overlap: 100
  group_by_columns: [id] # by default, we don't allow chunks to cross documents

input:
  type: file # or blob
  file_type: text # or csv
  base_dir: "input"
  file_encoding: utf-8
  file_pattern: ".*\\.txt$"

cache:
  type: file # or blob
  base_dir: "cache"
  # connection_string: <azure_blob_storage_connection_string>
  # container_name: <azure_blob_storage_container_name>

storage:
  type: file # or blob
  base_dir: "output/${timestamp}/artifacts"
  # connection_string: <azure_blob_storage_connection_string>
  # container_name: <azure_blob_storage_container_name>

reporting:
  type: file # or console, blob
  base_dir: "output/${timestamp}/reports"
  # connection_string: <azure_blob_storage_connection_string>
  # container_name: <azure_blob_storage_container_name>

entity_extraction:
  ## llm: override the global llm settings for this task
  ## parallelization: override the global parallelization settings for this task
  ## async_mode: override the global async_mode settings for this task
  prompt: "prompts/entity_extraction.txt"
  entity_types: [organization,person,geo,event]
  max_gleanings: 1

summarize_descriptions:
  ## llm: override the global llm settings for this task
  ## parallelization: override the global parallelization settings for this task
  ## async_mode: override the global async_mode settings for this task
  prompt: "prompts/summarize_descriptions.txt"
  max_length: 500

claim_extraction:
  ## llm: override the global llm settings for this task
  ## parallelization: override the global parallelization settings for this task
  ## async_mode: override the global async_mode settings for this task
  # enabled: true
  prompt: "prompts/claim_extraction.txt"
  description: "Any claims or facts that could be relevant to information discovery."
  max_gleanings: 1

community_reports:
  ## llm: override the global llm settings for this task
  ## parallelization: override the global parallelization settings for this task
  ## async_mode: override the global async_mode settings for this task
  prompt: "prompts/community_report.txt"
  max_length: 2000
  max_input_length: 8000

cluster_graph:
  max_cluster_size: 10

embed_graph:
  enabled: false # if true, will generate node2vec embeddings for nodes
  # num_walks: 10
  # walk_length: 40
  # window_size: 2
  # iterations: 3
  # random_seed: 597832

umap:
  enabled: false # if true, will generate UMAP embeddings for nodes

snapshots:
  graphml: false
  raw_entities: false
  top_level_nodes: false

local_search:
  # text_unit_prop: 0.5
  # community_prop: 0.1
  # conversation_history_max_turns: 5
  # top_k_mapped_entities: 10
  # top_k_relationships: 10
  # llm_temperature: 0 # temperature for sampling
  # llm_top_p: 1 # top-p sampling
  # llm_n: 1 # Number of completions to generate
  # max_tokens: 12000

global_search:
  # llm_temperature: 0 # temperature for sampling
  # llm_top_p: 1 # top-p sampling
  # llm_n: 1 # Number of completions to generate
  # max_tokens: 12000
  # data_max_tokens: 12000
  # map_max_tokens: 1000
  # reduce_max_tokens: 2000
  # concurrency: 32
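The model and api_base values in this configuration have to match what the two servers actually expose: glm4 is the name passed to vLLM with --served-model-name (default port 8000), and gpt-4 is the alias passed to the FastChat worker with --model-names (API server on port 8200). The sketch below checks this before indexing. It assumes both servers answer the OpenAI-style GET /models route with a JSON body containing a data list of objects with an id field. The names EXPECTED, served_model_ids and check_endpoint are illustrative.

```python
import json
import urllib.request

# (api_base, model) pairs copied from the llm and embeddings.llm sections.
EXPECTED = {
    "http://127.0.0.1:8000/v1": "glm4",
    "http://127.0.0.1:8200/v1": "gpt-4",
}


def served_model_ids(models_response: dict) -> set[str]:
    """Extract the model ids from the JSON body of a GET /models reply."""
    return {entry["id"] for entry in models_response.get("data", [])}


def check_endpoint(api_base: str, model: str, timeout: float = 10.0) -> bool:
    """Return True if the server at api_base lists the given model id."""
    with urllib.request.urlopen(f"{api_base}/models", timeout=timeout) as resp:
        return model in served_model_ids(json.load(resp))
```

Running check_endpoint(base, name) for each pair in EXPECTED.items() should return True for both. A False result means the model value in the configuration differs from the name the server was started with.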

Build the knowledge graph: python -m graphrag.index --root ./

5 Possible errors

- If an error occurs at runtime, add args['n'] = 1 at line 55 of miniconda/envs/${env_name}/lib/${python_version}/site-packages/graphrag/llm/openai/openai_chat_llm.py

- Pay attention to the context size of the LLM

- The log file is at ${id}/report.log under the output folder
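Because every run writes into a new subfolder of output, finding the right log by hand gets tedious. The sketch below picks the most recently modified *.log file under output and prints its last lines. It assumes only that the logs end in .log, so the exact file name does not matter. The helper names newest_log and tail are illustrative.

```python
from pathlib import Path
from typing import Optional


def newest_log(output_dir: str = "output") -> Optional[Path]:
    """Return the most recently modified *.log file under output_dir, if any."""
    root = Path(output_dir)
    if not root.is_dir():
        return None
    logs = [p for p in root.rglob("*.log") if p.is_file()]
    return max(logs, key=lambda p: p.stat().st_mtime, default=None)


def tail(path: Path, lines: int = 20) -> str:
    """Return the last `lines` lines of a text file."""
    text = path.read_text(encoding="utf-8", errors="replace")
    return "\n".join(text.splitlines()[-lines:])


if __name__ == "__main__":
    log = newest_log()
    print(tail(log) if log else "no log file found under ./output")
```

Run it from the project root, the directory passed to --root.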

The embedding model reports an error. Do I need to modify the source code?

2025-02-21 03:23:11 | ERROR | stderr | /usr/local/lib/python3.12/dist-packages/fastchat/serve/openai_api_server.py:136: PydanticDeprecatedSince20: The `dict` method is deprecated; use `model_dump` instead. Deprecated in Pydantic V2.0 to be removed in V3.0. See Pydantic V2 Migration Guide at https://errors.pydantic.dev/2.10/migration/

2025-02-21 03:23:11 | ERROR | stderr | ErrorResponse(message=message, code=code).dict(), status_code=400

2025-02-21 03:23:11 | INFO | stdout | INFO: 10.119.27.73:58276 - "POST /v1/embeddings HTTP/1.1" 400 Bad Request

First check the embedding model's endpoint.

It is exactly this endpoint that fails to run properly because of the version conflict mentioned in the error. Is this caused by a version update, or is something wrong with the dependency versions?

It is probably because of a version update.